Archive

SQL Server: How to fix error 7903

Recently I had to take care of the filestream corruption in SQL Server 2008 database, so I decided to share my experience. The error from DBCC CHECKDB was:

Msg 7903, Level 16, State 2, Line 1

Table error: The orphaned file “00005c8a-00006362-0001” was found in the FILESTREAM directory ID 9ae6ae63-bcc4-46f7-81cf-db99b01549e8 for object ID 903062353, index ID 1, partition ID 72057594125680640, column ID 11.

Explanation of the error 7903 is (from Cause and Resolution of Database Engine Errors):

“A FILESTREAM file was found in a FILESTREAM column directory; however, the corresponding column value in the partition is missing.”

This error cannot be repaired with CHECKDB so I decided to test what will happen if I physically delete mentioned filestream file. (You should take all user actions described in the document.)

I restored database to different server and executed DBCC CHECKDB again. It finished with the same error as I expected.

So I started to search for the document in the filestream directory of the database.

First I copied the file to another location (I wanted to have a copy of the file) and then I deleted it. When I executed DBCC CHECKDB again it finished without errors.

Deleting the orphaned file will resolve filestream consistency error 7903 (If you decide to delete the file be sure that you don’t need it.).

If you decide to do that on a production SQL Server, you do so entirely at your own risk.

Compare tables between 2 databases with Tablediff and PowerShell

Few days ago, I needed to compare tables and find all differences in data between 2 databases on different SQL Server instances. For that task I decided to use the Tablediff utility which is included with SQL Server.

Tablediff utility can be used for schema and row counts comparison or a row by row comparison of data between 2 tables. It can generate T-SQL script to fix differences between 2 tables. More information about tablediff utility you can find in BOL.

By default, tablediff utility can be found in the COM directory of SQL Server install folder.

For SQL Server 2012 default location is C:\Program Files\Microsoft SQL Server\110\COM\

For SQL Server 2008/2008 R2 default location is C:\Program Files\Microsoft SQL Server\100\COM\.

By default, tablediff is designed to compare 2 tables at time and will generate one .sql script file, and there is no way to do an automatic comparison on all tables between 2 databases with tablediff. To do that, I decided to create PowerShell script to run tablediff on all tables in database.

I use PowerShell version 3, with imported SQL Server 2012 SQLPS module. To demonstrate solution let’s create 2 databases with 2 tables (source and destination databases must have the same tables names).

CREATE DATABASE SourceDB; GO CREATE DATABASE DestinationDB; GO USE SourceDB; GO CREATE TABLE dbo.CompareTable (col1 int identity(1,1),col2 varchar(10)) GO INSERT INTO dbo.CompareTable (col2) SELECT 'test' UNION ALL SELECT 'test1' UNION ALL SELECT 'test2' GO CREATE TABLE dbo.CompareTable1 (col1 int identity(1,1),col2 varchar(10)) GO INSERT INTO dbo.CompareTable1 (col2) SELECT 'test' UNION ALL SELECT 'test1' UNION ALL SELECT 'test2' GO USE DestinationDB GO CREATE TABLE dbo.CompareTable(col1 int identity(1,1),col2 varchar(10)) GO INSERT INTO dbo.CompareTable (col2) SELECT 'test1' UNION ALL SELECT 'test2' GO CREATE TABLE dbo.CompareTable1 (col1 int identity(1,1),col2 varchar(10)) GO INSERT INTO dbo.CompareTable1 (col2) SELECT 'test' UNION ALL SELECT 'test1' UNION ALL SELECT 'test2' GO SELECT * FROM SourceDB.dbo.CompareTable SELECT * FROM SourceDB.dbo.CompareTable1 SELECT * FROM DestinationDB.dbo.CompareTable SELECT * FROM DestinationDB.dbo.CompareTable1 GO /* SourceDB.dbo.CompareTable col1 col2 ----------- ---------- 1 test 2 test1 3 test2 SourceDB.dbo.CompareTable1 col1 col2 ----------- ---------- 1 test 2 test1 3 test2 DestinationDB.dbo.CompareTable col1 col2 ----------- ---------- 1 test1 2 test2 DestinationDB.dbo.CompareTable1 col1 col2 ----------- ---------- 1 test 2 test1 3 test2 */

Now we have 2 databases with 2 tables and sample data. We see that data in CompareTable of SourceDB and DestinationDB aren’t the same.

To compare data in these tables with PowerShell we can run. (Before you run the command change the SourceServer and DestinationServer)

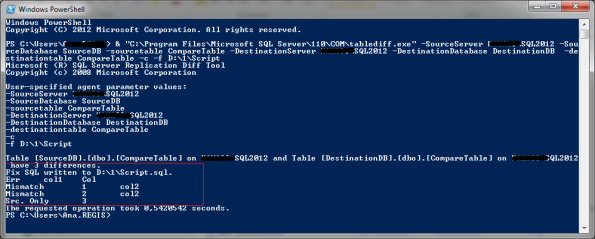

& "C:\Program Files\Microsoft SQL Server\110\COM\tablediff.exe" -SourceServer SERVER\SQL2012 -SourceDatabase SourceDB -sourcetable CompareTable -DestinationServer SERVER\SQL2012 -DestinationDatabase DestinationDB -destinationtable CompareTable -c -f D:\1\Script

We see 3 differences from result and Script.sql file is created to fix differences.

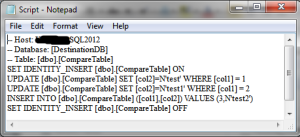

Content of Script.sql is:

That is simple example how to run tablediff to compare data in 2 tables and to generate .sql script to fix differences.

To compare data in all tables we need to loop through all tables in SourceDB and run tablediff for all tables. For every table we also need to set new file name for the T-SQL script file. (If file name specified in -f parameter exists tablediff will fail with error “The file %s already exists.”).

Here is the script:

# Date: 25/02/2013

# Author: Ana Mihalj

# Description: PS script to execute tablediff to compere and find all differences in data between 2 databases.

# Version: 1.0

# Example Execution: (with default parameters) .\TableDiffWithParam.ps1

# or with non-default parameters

# .\TableDiffWithParam.ps1 -SourceServer SERVER\INSTANCE -SourceDatabase SourceDB -DestinationServer SERVER\INSTANCE -DestinationDatabase DestinationDB -OutputFolder D:\Folder

param( [string]$SourceServer = "SERVER\INSTANCE",

[string]$SourceDatabase = "SourceDB",

[string]$DestinationServer = "SERVER\INSTANCE",

[string]$DestinationDatabase = "DestinationDB",

[string]$OutputFolder = "D:\Folder"

)

#set path to tablediff utility

$tablediff = "C:\Program Files\Microsoft SQL Server\110\COM\tablediff.exe"

#get tables for compare

if ($SourceServer.Contains("\") -eq "True")

{ $Tables = Get-ChildItem SQLSERVER:\SQL\$SourceServer\Databases\$SourceDatabase\Tables\ | SELECT name }

else

{ $Tables = Get-ChildItem SQLSERVER:\SQL\$SourceServer\Default\Databases\$SourceDatabase\Tables\ | SELECT name }

#create output folder if it does not exist

if ((Test-Path $OutputFolder) -eq $false)

{

md $OutputFolder

}

#Output file

$OutputFile = $OutputFolder+"\Output.txt"

#for each table

foreach($table in $Tables)

{

#create new file name for the Transact-SQL script file

$DiffScript = $OutputFolder+"\"+$table.Name+".sql"

#If file exists throw the errror.

if ((Test-Path $DiffScript) -eq $true)

{

throw "The file " + $DiffScript + " already exists."

}

#execute tablediff

& $tablediff -sourceserver $SourceServer -sourcedatabase $SourceDatabase -sourcetable $table.Name -destinationserver $DestinationServer -destinationdatabase $DestinationDatabase -destinationtable $table.Name -strict -c -o $OutputFile -f $DiffScript

# check the return value and throw an exception if needed

# tablediff return values: 0 - Success, 1 - Critical error, 2 - Table differences

if ($LastExitCode -eq 1)

{

throw "Error on table " + $table.Name + " with exit code $LastExitCode"

}

}

Before you run the script you need to change parameters value and the path to tablediff location (if your installation isn’t SQL Server 2012 on default installation folder).

I hope you’ll find this script useful.

How to remove “Server name” entry from “Connect to Server” dialog in SSMS

If you connect to a lot of different SQL servers with SQL Server Tools (SSMS, SQL Profiler etc.), the list of servers in “Connect to Server” dialog become large. Before SQL Server 2012 there was no way to delete individual server entry from the list or supported way to delete the list.

Some time ago, somewhere on the web I found a way how to delete the list of servers in “Connect to Server” dialog.

For SQL Server 2005 method is to close SSMS and then delete mru.dat file. On windows 2003 server file is located at %UserProfile%\Application Data\Microsoft\Microsoft SQL Server\90\Tools\Shell .

For SQL Server 2008/2008R2 method is to close SSMS and then delete SqlStudio.bin file. On windows 2003 server file is located at %UserProfile%\Application Data\Microsoft\Microsoft SQL Server\100\Tools\Shell. On Windows 7 and Windows 2008 server file is located at %UserProfile%\AppData\Roaming\Microsoft\Microsoft SQL Server\100\Tools\Shell.

A few days ago, I used the same method for SSMS 2012 by deleting SqlStudio.bin file from %UserProfile%\AppData\Roaming\Microsoft\SQL Server Management Studio\11.0.

But the problem in using these methods is that it’s unsupported by Microsoft and we lose user specific settings in SSMS.

Today I found that with SQL Server 2012 we have a supported way to delete server name from the list of servers in “Connect to Server” dialog by simply selecting the server name from the drop down list and then pressing the delete button.

It is documented in this blog post.

SQL Server 2012 RTM has released to manufacturing

Microsoft announced that SQL Server 2012 RTM has released to manufacturing and general availability is scheduled for 1 April 2012.

You can download an evaluation of the SQL Server 2012 RTM today.

Microsoft SQL Server 2012 Feature Pack is available here.

Today, don’t forget to attend the SQL Server 2012 Virtual Launch Event.

SQL Server 2012: Page restore from the SSMS UI

In my last blog post I wrote about various ways to detect damaged pages in the database and how to perform page restores in SQL Server 2005/2008. Same apply to SQL Server 2012. SQL Server 2008 doesn’t have UI support for page restore but SQL Server 2012 Management Studio (SSMS) brings us a nice UI support for page restore. If you prefer to do page restores with UI here are the steps:

-

In SSMS Object Explorer right click on database -> Tasks -> Restore then click on Page…

Restore Page window will be opened.

2. In Restore Page window, chosen database is selected (1) and Pages grid (2) is automatically populated with damaged pages from msdb.dbo.suspect_pages table (see picture below).

From Restore Page window you can run DBCC CHECKDB (3) to find if there are more damaged pages in the database or you can add additional pages if you want using Add (4) button. You also need to set the location for the log backup (5). Backup sets part (6) shows all backups that will be used to bring data pages up to date with the current log file.

3. Final step is to click OK button and damaged pages are restored. ![]()

SQL Server Page Restore

We all know how backup is important. I usually say that restore is more important than backup. We can create backup but if we don’t know how to restore it, or we cannot because it isn’t correct we have nothing. So always check your backups and restore it on different locations. In situation when you need to react quickly restore practice can save you a lot of times and nerves.

In this post I decided to show how to use page restore. Page restore isn’t something that has to be done on a regular basic, but every DBA should be familiar with technique and requirements in case page restore is needed.

Page restore requirements and limitations

Page restore is future available from SQL server 2005 version. Enterprise edition allows online page restore (restore of metadata pages require offline restore) while standard edition support only offline page restore.

Page restore is a nice future especially if you have a large database and a few damaged pages. As with all nice futures, page restore has some requirements and limitations. To be able to use page restore database must to be in full or bulk logged recovery model. Page restore must start with full, file or filegroup backup and there must be an unbroken chain of log backups up to the current log file. All log backups must be applied so that the page is brought up to date with the current log file.

Be aware that page restore cannot be used to restore: transaction log, full-text, allocation pages (GAM, SGAM, PFS, DIFF, ML), file header page (PageId =0 ), boot page (PageId =9).

How to identify damaged pages?

Damaged pages can be identified in multiple ways.

1. Sometimes, executing some queries on database can result in an error 824 (invalid page checksum error).

Msg 824, Level 24, State 2, Line 1

SQL Server detected a logical consistency-based I/O error: incorrect checksum (expected: 0xbf649245; actual: 0xbf648ec5). It occurred during a read of page (1:80) in database ID 9 at offset 0x000000000a0000 in file ‘D:\Microsoft SQL Server\MSSQL10_50.MSSQLSERVER\MSSQL\DATA\CorruptDB.mdf’. Additional messages in the SQL Server error log or system event log may provide more detail. This is a severe error condition that threatens database integrity and must be corrected immediately. Complete a full database consistency check (DBCC CHECKDB). This error can be caused by many factors; for more information, see SQL Server Books Online.

Analyzing the error we can see that database with databaseid = 9 has damaged page 80 on file 1 (page 1:80).

2. Damaged pages can be identified with DBCC CHECKDB command. Example results:

Msg 8928, Level 16, State 1, Line 2

Object ID 2105058535, index ID 0, partition ID 72057594038779904, alloc unit ID 72057594039828480 (type In-row data): Page (1:80) could not be processed. See other errors for details.

Msg 8939, Level 16, State 98, Line 2

Table error: Object ID 2105058535, index ID 0, partition ID 72057594038779904, alloc unit ID 72057594039828480 (type In-row data), page (1:80). Test (IS_OFF (BUF_IOERR, pBUF->bstat)) failed. Values are 12716041 and -4.

CHECKDB found 0 allocation errors and 2 consistency errors in table ‘CorruptTable’ (object ID 2105058535).

CHECKDB found 0 allocation errors and 2 consistency errors in database ‘CorruptDB’.

repair_allow_data_loss is the minimum repair level for the errors found by DBCC CHECKDB (CorruptDB).

3. dbo.suspect_pages table on msdb database. It contains one row per page that failed with an 823 error or an 824 error.

4. Check the SQL Server Error Log, and look for errors related to corrupted pages

How to perform page restore?

To perform page restore we need full backup containing un-damaged pages, differential and all transaction log backups to the current time. In the RESTORE DATABASE statement, we need to use the PAGE clause to list the page IDs of all of the pages to be restored. If we use offline page restore we first need to create backup log with NORECOVERY option. In this example, I will use online page restore.

--Restore database RESTORE DATABASE CorruptDB PAGE = '1:80' FROM DISK = 'D:\Backup\CorruptDB_full.bak' WITH NORECOVERY -- Restore log RESTORE LOG CorruptDB FROM DISK = 'D:\Backup\CorruptDB_log1.bak' WITH NORECOVERY /* Processed 1 pages for database 'CorruptDB', file 'CorruptDB' on file 1. RESTORE DATABASE ... FILE= successfully processed 1 pages in 0.023 seconds (0.339 MB/sec). Processed 0 pages for database 'CorruptDB', file 'CorruptDB' on file 1. RESTORE LOG successfully processed 0 pages in 0.002 seconds (0.000 MB/sec). */

In the example above, full backup with pageid = 80 is restored with option norecovery. Then we restored log with norecovery option. If we try to restore log with RECOVERY option without taking the last backup we would get an error:

Processed 0 pages for database ‘CorruptDB’, file ‘CorruptDB’ on file 1.

The roll forward start point is now at log sequence number (LSN) 43000000026100001. Additional roll forward past LSN 43000000042700001 is required to complete the restore sequence.

RESTORE LOG successfully processed 0 pages in 0.002 seconds (0.000 MB/sec).

You can query database and all data are available except damaged page. If we try to query data from table containing damaged page after we restored it with NORECOVERY option we will get an error:

ERROR:

Msg 829, Level 21, State 1, Line 1

Database ID 9, Page (1:80) is marked RestorePending, which may indicate disk corruption. To recover from this state, perform a restore.

-- Backup DB log BACKUP LOG CorruptDB TO DISK = 'D:\Backup\CorruptDB_log2.bak' -- Restore DB log with RECOVERY option RESTORE LOG CorruptDB FROM DISK = 'D:\Backup\CorruptDB_log2.bak' WITH RECOVERY

Now we can query our table without error.

Offline page restore procedure is slightly different. With offline restore we first need to take log backup with NORECOVERY option. It will put database to restoring state. Then we need to restore full database using PAGE clause and NORECOVERY option. Then differential and all transaction log backup need to be applied with NORECOVERY option. Last transaction backup, taken with NORECOVERY option, should be restored with RECOVERY option.

DBCC CHECKDB WITH ESTIMATEONLY: Do you trust it?

Yesterday I run into interesting situation with DBCC CHECKDB WITH ESTIMATEONLY command. Later I find out that it’s a known bug in SQL Server 2008 R2, but I experienced it for the first time and I think that it’s a good topic for my post. Here is the story.

Yesterday, after I restored database from the backup I decided to run DBCC CHECKDB on it. Data file size of tempdb database was 2.5GB. I know that DBCC CHECKDB is a resource-intensive task and it also require space in tempdb. I wanted to be sure that tempdb size is appropriate to accommodate the CHECKDB requirements so I run DBCC CHECKDB WITH ESTIMATEONLY.

DBCC CHECKDB(DatabaseName) WITH ESTIMATEONLY

Here is the output:

Estimated TEMPDB space needed for CHECKALLOC (KB): 40443

Estimated TEMPDB space needed for CHECKTABLES (KB): 13619

It’s ~ 53MB. I expected larger values because database occupies 28GB of space. But I thought that tempdb with 2.5GB of data file size is enough because there will be no other workload on the server.

I run DBCC CHECKDB(DatabaseName) and command completed successfully in about 11 minutes. Later I noticed that the size of tempdb data file is 3GB and data file auto growth occurred around the time of the DBCC CHECKDB execution.

That was very strange. Estimated tempdb space was about 53MB but it used more than 2.5GB of tempdb space. It is almost 50 times more than estimated space needed and it can lead to problem especially on very large databases. I decided to run DBCC CHECKDB command again and see usage of tempdb database.

Here is the script:

DECLARE @read BIGINT DECLARE @write BIGINT SELECT @read = SUM(fs.num_of_bytes_read), @write = SUM(fs.num_of_bytes_written) FROM tempdb.sys.database_files AS df INNER JOIN sys.dm_io_virtual_file_stats(2, NULL) AS fs ON fs.file_id = df.file_id WHERE df.type = 0 DBCC CHECKDB(DatabaseName) SELECT (SUM(fs.num_of_bytes_read) - @read) / 1024. / 1024. AS tempdb_read_MB, (SUM(fs.num_of_bytes_written) - @write) / 1024. / 1024. AS tempdb_write_MB, (SELECT internal_objects_alloc_page_count * 8. / 1024 FROM sys.dm_db_task_space_usage WHERE session_id = @@SPID) AS internal_objects_alloc_size_MB FROM tempdb.sys.database_files AS df JOIN sys.dm_io_virtual_file_stats(2, NULL) AS fs ON fs.file_id = df.file_id WHERE df.type = 0

Results:

tempdb_read_MB tempdb_write_MB internal_objects_alloc_size_MB

3192.21093750000 3192.60156250000 3194.562500

From results, we can see that more than 3.1GB of data are written and read during DBCC CHECKDB execution.

I executed few more tests with different databases to see a difference between estimated tempdb space and the real usage during CHECKDB operation and only first database had issue with it.

I googled and found the excellent blog post by Paul Randal (blog|twitter): How does DBCC CHECKDB WITH ESTIMATEONLY work? At the end of the post Paul wrote:

“PS Beware also that I’ve heard of several cases of a bug in SQL Server 2008 R2 where the output is incorrectly very low. The dev team is aware of this issue and are working on it.”

Be careful if you need to rely on DBCC CHECKDB WITH ESTIMATEONLY output. You can be surprised, especially if you are working with large or very large databases.

User-defined Server Roles in SQL Server 2012

In SQL Server 2008 R2 we can create database role, assign appropriate permission to it and then add users or groups as a member of database role. It works well for database level permissions. For server level permissions, we can only add user in one or more predefined fixed server roles or assign individual permissions to it. Usually, my users don’t need all permissions from the fixed server role and I like to add least privilege to my users, especially on server level, so I end up by giving individual server level permissions to individual users. That will change in SQL Server 2012.

User-defined server role is one of the new security enhancements in SQL Server 2012. It can be created and managed using the CREATE SERVER ROLE, ALTER SERVER ROLE and DROP SERVER ROLE commands, or we can use SSMS UI. Only server scope permission can be added to server roles. To see all built-in permissions that can be assigned to server role we can run:

SELECT * FROM sys.fn_builtin_permissions(default)

WHERE class_desc in ('SERVER', 'ENDPOINT','LOGIN','AVAILABILITY GROUP','SERVER ROLE')

ORDER BY class_desc

I decided to test new feature by creating server role for a performance checking. It should allow the user to do some server checking, running DMVs, reading definitions, running server side traces and extended event sessions. Next script will create server role PerfRole with required permissions and will add PerfLogin as a member of the role.

USE [master] GO CREATE SERVER ROLE [PerfRole] AUTHORIZATION [sa] GO GRANT CONNECT SQL TO [PerfRole] GRANT VIEW ANY DATABASE TO [PerfRole] GRANT VIEW ANY DEFINITION TO [PerfRole] GRANT VIEW SERVER STATE TO [PerfRole] GRANT ALTER ANY EVENT SESSION TO [PerfRole] GRANT ALTER TRACE TO [PerfRole] GO ALTER SERVER ROLE [PerfRole] ADD MEMBER [PerfLogin] GO

I log in as a PerfLogin and everything work fine. I can run DMVs, server side traces and SQL profiler. I can create and manage Extended Event session. I can see what was happening on the server without having to be in the sysadmin role or have individual permissions assigned to my login.

As you know, member of fixed server role can add members to the same role, but member of a user server role will not have permission to add members to the same role. Login must have, at least, ALTER permission on that role to add members to the role.

GRANT ALTER ON SERVER ROLE::[PerfRole] TO [PerfLogin] GO

If you want that all members of a user defined role can add members to the role you can give ALTER permission to the role.

GRANT ALTER ON SERVER ROLE::[PerfRole] TO [PerfRole] GO

User-defined server roles are nice addition to SQL Server 2012. These will simplify administration of instance-level rights and help increase the security of SQL Server instances.

UPDATE STATISTICS: How to cheat the optimizer

Did you ever need to see what execution plan will optimizer choose for your query on large set of data but you have a really small test database? Or you are testing your notification system for sort warning, for example, and you need to force query optimizer to think that tables used in a query are smaller? If your answer is yes, then keep reading.

Statistics is very important to the query optimizer for generating execution plans and it’s very important that statistics is up to date in a production database. To update statistics we use UPDATE STATISTICS statement. If you read BOL page for update statistics you can see that statement has some undocumented options. We can use these undocumented options, ROWCOUNT and PAGECOUNT, to cheat the optimizer. In the SQL Server Query Optimization Team blog, we can read that ROWCOUNT and PAGECOUNT alter the internal metadata of the specified table or index by overriding the counters containing the row and page counts of the object.

In the next example, I will show how to use ROWCOUNT and PAGECOUNT options and how to cheat the optimizer. You will see that internal metadata for the table are changed and that statistics isn’t updated. Never use this in production database.

To see how it works I will use Sales.SalesOrderDetail table in AdventureWorks2008R2 database.

First, let us see statistics for table’s primary key and information’s about rows and pages in the table from sys.partitions and sys.dm_db_partition_stats.

DBCC SHOW_STATISTICS ('Sales.SalesOrderDetail',PK_SalesOrderDetail_SalesOrderID_SalesOrderDetailID);

SELECT * FROM sys.partitions

WHERE object_id = object_id('Sales.SalesOrderDetail')

SELECT * FROM sys.dm_db_partition_stats

WHERE object_id = object_id('Sales.SalesOrderDetail')

Results:

DBCC SHOW_STATISTICS tell us (1) that Aug 2 2010 is the last time when statistics was updated and that table has 121.317 rows. Sys.partition (2) also tells us that table has 121.317 rows. sys.dm_db_partition_stats (3) shows us that 1.234 pages are used for storing in-row data.

Now, let’s UPDATE STATISTICS with ROWCOUNT and PAGECOUNT options. I set 500.000 rows and 10.000 pages but you can set any other value.

UPDATE STATISTICS Sales.SalesOrderDetail WITH ROWCOUNT = 500000,PAGECOUNT = 10000

After we updated statistics with ROWCOUNT and PAGECOUNT option let us see again what is written in statistics and in sys.partitions and sys.dm_db_partition_stats.

From (1) we can see that statistics isn’t updated. From (2) we can see that sys.partition has information that table has 500.000 rows and sys.dm_db_partition_stats thinks that table occupies 10.000 pages (3). These values are used by query optimizer during query processing.

Let’s run a simple query on Sales.SalesOrderDetail table and look at actual execution plan of the query.

SELECT SalesOrderID FROM Sales.SalesOrderDetail

Query is very simple. We want all SalesOrderID from the table. We can see that (1) actual number of rows is 121.371 but query optimizer thought (2) that there are 500.000 rows in the table.

Using ROWCOUNT and PAGECOUNT options allow us to cheat the query optimizer and to easily see what kind of query plan will be generated on large amount of data. You can also specify smaller number of rows and pages to simulate a sort warning for example.

How to change/reset SQL login password

Yesterday I saw a question about required permission for changing SQL Server login password and I decided to write a blog post about it. Question was: I have a SQL logins: Login1 and Login2. I gave ALTER LOGIN permission on Login1 to Login2 so that Login2 can change the password for Login1. But when I connect as Login2 and run ALTER LOGIN Login1 WITH password = ‘NewPassword’ an error occur. Any idea if this is a bug or by-design?

Before I answer the question let’s create a script to demonstrate the problem.

USE master; GO -- Create the login Login1 CREATE LOGIN Login1 WITH PASSWORD = 'Pa$$w0rd'; -- Create the login Login2 CREATE LOGIN Login2 WITH PASSWORD = 'Pa$$w0rd'; -- Grant the Login2 the required permissions to change Login1 password GRANT ALTER ON LOGIN::Login1 TO Login2; GO -- Set the execution context to Login2. EXECUTE AS LOGIN = 'Login2'; -- Reset password for the Login1 ALTER LOGIN Login1 WITH Password = 'Sec123';

An error occurs:

Msg 15151, Level 16, State 1, Line 1

Cannot alter the login ‘Login1’, because it does not exist or you do not have permission.

To understand required permission for changing SQL login password we need to understand the difference between password reset and password change. When you reset password for SQL login you only need to supply the new password, but when you change password for SQL login you must supply the new password and the old password. Required permission for resetting password is at least ALTER ANY LOGIN and for changing password required permission is at least ALTER LOGIN.

From a previous script, you can see that Login2 tries to reset password for Login1 but it has only permission to change the password. To change the password for Login1, Login2 must supply the new and the old password. Next example shows how to change the password for the login Login1.

-- Set the execution context to Login2. EXECUTE AS LOGIN = 'Login2'; -- Change the password for Login1 ALTER LOGIN Login1 WITH Password = 'Sec123' OLD_PASSWORD = 'Pa$$w0rd';

Now command executed successfully. If Login2 doesn’t know the old password for Login1, it must have at least ALTER ANY LOGIN permission to reset the password for Login1. But with ALTER ANY LOGIN permission, Login2 can reset password for all logins except for logins which are member of sysadmin fixed server role or a grantee of CONTROL SERVER permission. Next example shows how to give ALTER ANY LOGIN permission to Login2 and demonstrate password reset for the login Login1.

-- Grant the Login2 ALTER ANY LOGIN permission GRANT ALTER ANY LOGIN TO Login2; -- Set the execution context to Login2. EXECUTE AS LOGIN = 'Login2'; -- Reset password for Login1 ALTER LOGIN Login1 WITH Password = 'NewPa$$word';

Now I can answer to the question. 🙂 No, it’s not a bug. It’s a normal behavior of SQL Server. Same behavior also applies for resetting and changing password on Windows OS.

In this post, I showed the difference between resetting and changing the SQL login password. Login needs ALTER LOGIN permission to change the password. At least ALTER ANY LOGIN permission is required if login want to reset password.